CHALLENGES DURING THE PANDEMIC

The global pandemic has caused unforeseen disturbances within established business models. Most firms now face a myriad of unprecedented challenges, including frequent changes in rules and regulations, volatile economic conditions, rapid shifts in customer behavior, the decreasing effectiveness of sales channels, and disruptions in supply chains. Businesses need to realign themselves with emerging market trends, such as the rapid growth of omni-channel customer experiences and the rise of contactless offerings that replace physically shared services.

New opportunities emerge as the world navigates its way through pandemic-fueled uncertainty. In this environment, many incumbents will lose their way, while new players rise across industries. As with any business disruption, success is highly correlated with the speed and agility with which decision-makers respond.

Data-driven insights have become an essential compass for navigating these uncharted territories. Even as companies look to optimize costs to hedge against the current downturn, many businesses are ramping up their investments in data infrastructure and analytics.

Analytics solutions designed and calibrated in prior economic environments will not be equally effective in the post-pandemic world.

All three drivers of successful analytics solutions, namely A) Underlying Data, B) Modeling Techniques, and C) Resources, have been deeply impacted by the pandemic.

Consequently, businesses face the challenge of a large-scale recalibration and redesign of their analytical capabilities.

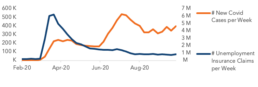

Exhibit 1: Pandemic’s Impact on Analytics Solutions

This paper takes a deeper look at the factors disrupting the typical analytical solution design process and presents our recommendations for the new normal. It also includes a few examples of modeling techniques that have become more effective in the new environment.

A) UNDERLYING DATA

Impact of the Pandemic:

1. Unavailability of reliable data: A critical requirement for robust and effective modeling is the availability of trustworthy historical data. Insights from pre-pandemic times will not have the same predictive power in this unique operating environment. Even data from the early days of the pandemic may not be relevant in later months of the crisis, as patterns keep shifting globally.

2. Delay in critical information: Macroeconomic indicators are typically the primary drivers of most time-series models, including revenue forecasting and budget estimation exercises. Indicators such as the unemployment rate, inflation rate, and GDP growth rate are published only monthly or quarterly, often with a reporting lag, which is “too late” in the current volatile and shifting environment. This time lag in critical inputs can significantly reduce the efficacy of the proposed solutions.

3. Inability to draw parallels to previous downturns: Even data from historical stress scenarios is not directly useful, given the novel and unique nature of the current situation. For example, frameworks designed around the 2008-09 global financial crisis might have used housing prices as a leading indicator of economic health, but that relationship does not hold in the current situation. These days, even stock market indices, which are typically considered robust indicators of economic health, are driven more by investor sentiment than by actual business activity.

The Way Forward:

1. Rethinking data transformations: As traditional indicators used in analytics frameworks lose their predictive power, fresh ideas are needed to design new derivations and transformations of existing data that better align models with the external environment. For example, monthly or quarterly declines in customer engagement metrics were typically seen as better indicators of customer attrition risk than weekly declines, because trends measured over shorter time windows were noisier and increased false positive rates. However, the increased volatility and radical changes in customer dynamics in recent months have shifted the balance towards transformations with shorter time windows. Insights derived from monthly or quarterly results would be outdated and ineffective for many business activities in today’s world.
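To make the window trade-off concrete, here is a minimal Python sketch that compares the same engagement series under a short and a long window. The function names, the 30% drop threshold, and the weekly login figures are illustrative assumptions, not a prescribed attrition rule.

```python
from statistics import mean

def engagement_drop(series, window):
    """Relative change in mean engagement between the latest `window`
    periods and the `window` periods just before them.
    Returns None when there is not enough history or the prior mean is zero."""
    if window <= 0 or len(series) < 2 * window:
        return None
    recent = mean(series[-window:])
    prior = mean(series[-2 * window:-window])
    if prior == 0:
        return None
    return (recent - prior) / prior

def attrition_flag(series, window, threshold=-0.3):
    """Flag a customer when engagement fell by at least 30% (by default)
    over the chosen window."""
    drop = engagement_drop(series, window)
    return drop is not None and drop <= threshold

# Hypothetical weekly logins: stable for 14 weeks, then a sharp fall.
weekly_logins = [10] * 14 + [3, 2]
print(attrition_flag(weekly_logins, window=2))  # True  (2-week window)
print(attrition_flag(weekly_logins, window=8))  # False (roughly 2-month window)
```

The longer window averages the recent collapse away, which is exactly the behavior that suppressed false positives in stable times but now delays the signal.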

2. Alternative data sources: Sources of information that were not widely used as indicators in the pre-COVID world may now be more powerful. Data sources that are published more frequently can substitute for traditional sources. For example, weekly unemployment insurance claims can be used as a proxy for the unemployment rate. Other emerging trends in the industry include:

- Increased digital touch in all spheres of customer interaction makes insights derived from digital sources extremely valuable. For instance, product engagement data from mobile and web-based applications is being used to derive actionable marketing intelligence, such as personalized offers and product feature enhancements. Social media data is used to rethink brand positioning and measure customer satisfaction / NPS.

- As the severity of the pandemic shifts across the globe and changes people’s habits, data scientists have begun to look for innovative proxies for business activity by region. For example, firms have used Google’s Community Mobility Reports and OpenTable reservation data as indicators of economic activity in different regions.
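The weekly-claims proxy described above can be sketched as a simple nowcast: average weekly claims by month, fit a straight line against the published monthly unemployment rate, and read off the current month before the official figure is out. This is a deliberately minimal illustration; the function names are hypothetical, the numbers are synthetic, each week is assigned to the month of its end date, and a real nowcast would also handle seasonality, revisions, and reporting lags.

```python
from collections import defaultdict
from datetime import date

def monthly_average(weekly):
    """Average weekly observations by calendar month.
    `weekly` is a list of (week_ending_date, value) pairs."""
    buckets = defaultdict(list)
    for week_end, value in weekly:
        buckets[(week_end.year, week_end.month)].append(value)
    return {month: sum(vals) / len(vals) for month, vals in buckets.items()}

def fit_line(xs, ys):
    """Ordinary least squares for y = a + b * x."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    b = sxy / sxx
    return my - b * mx, b

def nowcast_rate(weekly_claims, monthly_rate, target_month):
    """Estimate the rate for `target_month` (a (year, month) tuple) from weekly
    claims. Months with both series are used for fitting; the target month
    must have at least one week of claims."""
    claims = monthly_average(weekly_claims)
    history = sorted(m for m in monthly_rate if m in claims and m != target_month)
    a, b = fit_line([claims[m] for m in history], [monthly_rate[m] for m in history])
    return a + b * claims[target_month]

# Synthetic example: claims in thousands, rates in percent.
weekly = [
    (date(2020, 1, 4), 200), (date(2020, 1, 11), 200),
    (date(2020, 2, 1), 300), (date(2020, 2, 8), 300),
    (date(2020, 3, 7), 400),
    (date(2020, 4, 4), 450), (date(2020, 4, 11), 550),
]
rates = {(2020, 1): 3.0, (2020, 2): 4.0, (2020, 3): 5.0}
print(round(nowcast_rate(weekly, rates, (2020, 4)), 2))  # 6.0
```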

Exhibit 2: Sample Google Community Mobility Report

The reports show the percentage change in visits to different categories of public places, by county, relative to a pre-pandemic baseline
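A firm building its own mobility-style indicator from internal footfall or transaction counts could mirror the report's percent-change-from-baseline idea. The sketch below assumes a baseline defined the way Google describes its reports, as the median value for the same day of the week over 3 January to 6 February 2020; check the report documentation before relying on those dates. The function names and the input layout (a dict from date to count for one place category and region) are illustrative assumptions.

```python
from datetime import date
from statistics import median

# Assumed baseline window (per Google's report notes): 3 Jan to 6 Feb 2020.
BASELINE_START = date(2020, 1, 3)
BASELINE_END = date(2020, 2, 6)

def weekday_baselines(visits, start=BASELINE_START, end=BASELINE_END):
    """Median visit count for each weekday (0 = Monday) inside [start, end].
    `visits` maps a date to a visit count for one place category and region."""
    by_weekday = {}
    for day, count in visits.items():
        if start <= day <= end:
            by_weekday.setdefault(day.weekday(), []).append(count)
    return {weekday: median(counts) for weekday, counts in by_weekday.items()}

def percent_change_from_baseline(visits, baselines):
    """Percentage change of each day's visits versus the baseline for its weekday.
    Days whose weekday has no (or a zero) baseline are skipped."""
    changes = {}
    for day, count in visits.items():
        base = baselines.get(day.weekday())
        if base:
            changes[day] = 100.0 * (count - base) / base
    return changes
```

Comparing each day with the same weekday, rather than with a single overall average, keeps normal weekly patterns (such as quieter Sundays) from showing up as false declines.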

3. Leveraging newly built data solutions: Many technology companies have built COVID-19 data solutions and released them to the public free of charge. Many of these databases are updated daily and can be integrated with standard analytics tools.

Examples include:

- Tableau, owned by Salesforce, is providing free data resources and training to help users quickly integrate data from the WHO and CDC.

- The White House Office of Science and Technology Policy (OSTP) has partnered with technology firms, including Microsoft, to create a COVID-19 impact data repository.

- Crowdsourcing has enabled the development of open-source COVID-19 data libraries for standard analytical tools such as R and Python.
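Many public COVID-19 datasets, the Johns Hopkins CSSE time series among them, publish cumulative counts in a wide layout: one row per region and one column per date. A first step when pulling such data into a model is usually converting cumulative totals into daily new counts. The sketch below uses only the Python standard library and assumes that layout, a unique key column, and that every non-identifier column is a date in chronological order; rows sharing a key (for example, several provinces of one country) would need to be summed first.

```python
import csv
import io

def daily_new_cases(csv_text, key_column, id_columns=()):
    """Turn a wide table of cumulative counts (one row per region, one column
    per date) into daily new counts per region.

    Columns other than `key_column` and `id_columns` are treated as dates,
    in the order they appear."""
    reader = csv.DictReader(io.StringIO(csv_text))
    skip = {key_column, *id_columns}
    date_columns = [c for c in reader.fieldnames if c not in skip]
    result = {}
    for row in reader:
        cumulative = [int(float(row[c])) for c in date_columns]
        # Day-over-day differences; the first day keeps its cumulative value.
        # Negative values usually signal a data correction in the source.
        daily = [cumulative[0]] + [b - a for a, b in zip(cumulative, cumulative[1:])]
        result[row[key_column]] = dict(zip(date_columns, daily))
    return result

# Illustrative input in the assumed wide format.
sample = "Region,Lat,Long,3/1/20,3/2/20,3/3/20\nA,0,0,1,4,10\nB,0,0,0,0,2\n"
print(daily_new_cases(sample, "Region", id_columns=("Lat", "Long"))["A"])
# {'3/1/20': 1, '3/2/20': 3, '3/3/20': 6}
```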

Exhibit 3: Example of leveraging public data libraries with real time COVID statistics

A wealth of real-time market information has been made available for public use and can provide valuable business insights

B) MODELING TECHNIQUES

Impact of the Pandemic:

1. Limitations of current analytical solutions: The majority of existing analytical solutions were customized to provide superior business intelligence under normal economic operating conditions. They were not designed for the kind of black swan event currently reshaping the economy, and a large-scale model revalidation and recalibration effort is now underway across most data-science teams. For example, to capture the effects of small changes in macro trends (e.g., annual growth in GDP, population, or interest rates), models often applied power or exponential functions to magnify those effects. When inputs move far outside the range seen during calibration, as many did during the pandemic, these transformations can produce extreme or counterintuitive outputs.
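A small numeric sketch shows how such a transformation breaks. The features below are hypothetical: squared GDP growth, which only increases with growth while growth stays positive, and an exponential amplifier with an illustrative scale `k`. If calibration data only covered growth of 1% to 3%, a 9% contraction produces a squared feature larger than any boom year in the sample. Clamping inputs to the calibrated range, also shown, is only a stopgap that hides the shock until the model is recalibrated.

```python
import math

def squared_growth(g):
    """Hypothetical feature: squared GDP growth, meant to magnify small changes.
    Increasing in g only while g stays positive."""
    return g ** 2

def exp_growth(g, k=50.0):
    """Hypothetical feature: exponential amplification of GDP growth (k is illustrative).
    Moving g by d multiplies the feature by exp(k * d)."""
    return math.exp(k * g)

def clip_to_calibration(g, low, high):
    """Clamp an input to the range observed during calibration."""
    return max(low, min(high, g))

# Calibration history: growth between 1% and 3% per year (illustrative).
calibrated = [squared_growth(g) for g in (0.01, 0.02, 0.03)]
shock = -0.09
print(squared_growth(shock) > max(calibrated))  # True: a 9% contraction outscores every boom year
print(clip_to_calibration(shock, 0.01, 0.03))   # 0.01
```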

2. Rising complexity of algorithms: Rapid increases in computing power and easier access to clean, structured data have led analytics professionals to deploy elaborate statistical techniques to power most business decisions. Many of today’s models are bespoke, leveraging vast amounts of historical data and multiple mathematical concepts to drive superior performance. Because of the complexity and intricate interlinkages of multiple algorithms, recalibrating these large models takes considerable time and effort.

As an example, highly sophisticated algorithms are a core competency for many trading firms, which use them to generate revenue from regular market movements. In April 2020, when the price of WTI crude oil futures dropped below zero, many of these quantitative trading models stopped working, as they were not designed to operate under such conditions. Consequently, many firms booked heavy losses and spent considerable time and effort recalibrating and rewiring their complex models.
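One simple way a model can be “not designed” for negative prices: many quantitative building blocks work with log returns, log(P_t / P_t-1), which are undefined once a price reaches zero or turns negative. The sketch below is illustrative only; the source does not say which components failed at the firms it mentions, and the function names and prices are hypothetical. It makes the domain check explicit and falls back to absolute price changes, so the problem surfaces loudly instead of as silent NaNs or crashes downstream.

```python
import math

def log_returns(prices):
    """Log returns log(P_t / P_{t-1}); only defined for strictly positive prices."""
    if any(p <= 0 for p in prices):
        raise ValueError("log returns require strictly positive prices")
    return [math.log(b / a) for a, b in zip(prices, prices[1:])]

def price_changes(prices):
    """Absolute changes P_t - P_{t-1}, which remain defined for zero or negative prices."""
    return [b - a for a, b in zip(prices, prices[1:])]

def robust_changes(prices):
    """Use log returns inside their domain; otherwise fall back to absolute
    changes and report which representation was used."""
    if all(p > 0 for p in prices):
        return "log", log_returns(prices)
    return "diff", price_changes(prices)

# Hypothetical settlement prices in which the last one turns negative.
print(robust_changes([20.0, 10.0, -5.0]))  # ('diff', [-10.0, -15.0])
```

The fallback does not fix a model calibrated on log returns; it only flags that the inputs have left the range the model was built for, which is the cue to recalibrate.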

The Way Forward:

1. Speed & agility: Even a perfect model, with comprehensive sensitivity and scenario analysis, will not deliver sustained, stable performance in such a volatile environment. Constant monitoring of model health, frequent recalibration, and the inclusion of new data sources will be common themes in the days to come. Combining new data with historical models requires more specialized methodologies (e.g., overlays) than the standard use of historical data. Customized solutions that combine multiple mathematical theories typically take more time and effort to recalibrate. The focus for business analytics teams should therefore shift towards quick, iterative, easily deployable solutions that can be rapidly realigned with changes in the external climate.
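Constant model-health monitoring needs a cheap, repeatable drift metric. One widely used option (our choice here, not one named in the text) is the Population Stability Index, which compares the distribution of a model score or input today with its distribution at development time: PSI = Σ (actual − expected) × ln(actual / expected) over bins. The sketch below uses quantile bins from the development sample. The common readings of below 0.1 as stable and above 0.25 as a significant shift are industry rules of thumb, not standards, and the score data is hypothetical.

```python
import math
from bisect import bisect_right

def quantile_edges(reference, n_bins=10):
    """Inner cut points that split the reference (development) sample into
    roughly equal-sized bins."""
    ordered = sorted(reference)
    return [ordered[len(ordered) * i // n_bins] for i in range(1, n_bins)]

def bin_shares(values, edges, floor=1e-6):
    """Share of values in each bin; empty bins get a small floor to avoid log(0)."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    return [max(c / len(values), floor) for c in counts]

def population_stability_index(reference, current, n_bins=10):
    """PSI = sum over bins of (actual - expected) * ln(actual / expected)."""
    edges = quantile_edges(reference, n_bins)
    expected = bin_shares(reference, edges)
    actual = bin_shares(current, edges)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))

# Hypothetical model scores at development time and after a shift in behavior.
scores_dev = list(range(100))
scores_now = [s + 50 for s in range(100)]
print(population_stability_index(scores_dev, scores_dev))          # 0.0
print(population_stability_index(scores_dev, scores_now) > 0.25)   # True
```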

2. Leveraging open-source solutions: An easy way to reduce the time and effort required to build analytics solutions is to rely on open-source AI / machine learning algorithms. These algorithms are open to modification and improvement by the public and are continuously optimized for performance. As a result, they give businesses access to cutting-edge methodologies at little or no licensing cost. Many of these algorithms have a proven track record across multiple functions and industries and, more importantly, can be recalibrated and redesigned quickly to keep up with exogenous changes in business conditions.

Exhibit 4: Open-source operating process

3. Using the right tool: Artificial intelligence and machine learning power everyday business decision-making and provide competitive advantages in today’s business landscape. A plethora of open-source algorithms is available to data scientists today, and fitting algorithms to datasets has become simpler than ever before. The missing link in the successful delivery of most analytics solutions is the ability to choose the most appropriate algorithm and statistical concept for each use case.

In today’s uncertain times, increased volatility and disruption to standard operating environments have made this task even more complex.

Many examples can be drawn upon to illustrate how the pandemic has perturbed the standard model development process and how different techniques have become more relevant.

A. Dynamic Customer Segmentation

Portfolio segmentation is a precursor to most analytical exercises, with an established process that combines business experience and standard statistical techniques. Typically, data scientists fit different algorithms and calibrate models with new data within a static segmentation. Under the current circumstances, however, an effective analytics framework needs a more agile approach to segmentation.

The intensity of COVID-19 varies by industry and geography as different regulations are imposed. Moreover, customers are changing their behaviors more frequently than ever before. Unsupervised machine learning algorithms, such as the K-Means and DBSCAN clustering algorithms and the Apriori association-rule algorithm, provide quick segmentation insights and have become an integral part of many learning and prediction engines. Many businesses are also shifting towards neural-network-based solutions because of their strength in targeting prospects within large databases.
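The idea of dynamic segmentation can be sketched with plain K-Means (Lloyd's algorithm) that is simply re-fitted on each period's snapshot instead of freezing segments defined on pre-pandemic data. This is a minimal, dependency-free illustration; the farthest-point seeding, the per-period refit, and the two features (share of purchases made online, change in monthly spend) are illustrative assumptions. In practice a library implementation such as scikit-learn's `KMeans` would normally be used.

```python
import math

def kmeans(points, k, iterations=100):
    """Plain k-means (Lloyd's algorithm) on a list of numeric tuples.
    Centroids are seeded with a farthest-point heuristic for determinism."""
    centroids = [points[0]]
    while len(centroids) < k:
        # Next seed: the point farthest from its nearest existing centroid.
        centroids.append(max(points, key=lambda p: min(math.dist(p, c) for c in centroids)))
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Recompute each centroid as the mean of its members (keep it if empty).
        new_centroids = [
            tuple(sum(dim) / len(cluster) for dim in zip(*cluster)) if cluster else centroids[i]
            for i, cluster in enumerate(clusters)
        ]
        if new_centroids == centroids:
            break
        centroids = new_centroids
    labels = [min(range(k), key=lambda i: math.dist(p, centroids[i])) for p in points]
    return centroids, labels

def segment_by_period(snapshots, k):
    """Re-fit the segmentation on each period's snapshot of customer features."""
    return {period: kmeans(points, k) for period, points in snapshots.items()}

# Hypothetical features: (share of purchases made online, change in monthly spend).
snapshots = {
    "2020-01": [(0.20, 0.00), (0.25, 0.05), (0.80, 0.10), (0.85, 0.00)],
    "2020-05": [(0.70, -0.40), (0.75, -0.35), (0.90, 0.20), (0.95, 0.25)],
}
for period, (centroids, labels) in segment_by_period(snapshots, k=2).items():
    print(period, [tuple(round(x, 3) for x in c) for c in centroids], labels)
```

Comparing the centroids period by period shows how segments themselves drift, which a static segmentation would never reveal.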

Exhibit 5: Illustration of how a customer population should be dynamically segmented, as trends change over time

B. Consideration of a Larger Set of Predictors

Past successes and years of business experience have typically provided model developers with a handful of predictive variables to start with. In many cases, dimension reduction exercises did not make a significant difference and were often ignored.

The uncertainty fueled by the pandemic is now forcing data scientists to find new data sources and devise innovative transformations. Prior experience is less effective, and a wider list of predictive variables is needed at the start to capture the indicators with the highest predictive power in the current environment. Dimension-reduction techniques such as LDA (Linear Discriminant Analysis) or PCA (Principal Component Analysis) can then be used to derive compact, powerful predictor lists from these large consideration sets.

As an example, in prioritizing customers for a relationship-check campaign, in addition to the standard variables used (such as spends, customer value, contract status, etc.), digital engagement and social media behavior metrics need to be included in the current environment. Different transformations can then be derived from these new inputs, as model developers test and learn the respective predictive powers. This process would significantly increase the number of variables under consideration, potentially making the models bulky. An initial dimension reduction exercise can speed up both the initial model development process, as well as future recalibration efforts.
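The initial dimension-reduction step can be illustrated with PCA on standardized variables. The sketch below is dependency-free so the mechanics are visible: it builds the correlation matrix, finds the leading eigenvector by power iteration, and removes it (deflation) before looking for the next one. It is a teaching sketch, not a production implementation; a library routine such as scikit-learn's `PCA` would be the natural choice in practice, and LDA, which needs a target label, is not shown. The three variables are hypothetical.

```python
import math

def standardize(rows):
    """Center each column and scale it to unit (sample) variance."""
    n = len(rows)
    columns = []
    for col in zip(*rows):
        m = sum(col) / n
        sd = math.sqrt(sum((x - m) ** 2 for x in col) / (n - 1)) or 1.0  # guard constant columns
        columns.append([(x - m) / sd for x in col])
    return [list(r) for r in zip(*columns)]

def power_iteration(matrix, iterations=1000, tol=1e-12):
    """Dominant eigenvalue and unit eigenvector of a symmetric PSD matrix."""
    d = len(matrix)
    v = [float(i + 1) for i in range(d)]  # arbitrary start; must not be orthogonal to the target
    for _ in range(iterations):
        w = [sum(matrix[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0.0:
            return 0.0, v
        w = [x / norm for x in w]
        converged = max(abs(a - b) for a, b in zip(w, v)) < tol
        v = w
        if converged:
            break
    eigenvalue = sum(v[i] * matrix[i][j] * v[j] for i in range(d) for j in range(d))
    return eigenvalue, v

def pca(rows, n_components):
    """Principal components of standardized data via power iteration and deflation.
    Returns (loadings, explained_variance_ratios)."""
    z = standardize(rows)
    n, d = len(z), len(z[0])
    cov = [[sum(r[i] * r[j] for r in z) / (n - 1) for j in range(d)] for i in range(d)]
    total = sum(cov[i][i] for i in range(d))
    loadings, ratios = [], []
    for _ in range(n_components):
        lam, vec = power_iteration(cov)
        loadings.append(vec)
        ratios.append(lam / total)
        # Deflation: subtract the found component so the next search finds the next one.
        cov = [[cov[i][j] - lam * vec[i] * vec[j] for j in range(d)] for i in range(d)]
    return loadings, ratios

# Hypothetical predictors: x2 nearly duplicates x1; x3 is unrelated to both.
x1 = [1, 2, 3, 4, 5, 6]
x2 = [1.1, 1.9, 3.2, 3.8, 5.1, 5.9]
x3 = [1, -1, -1, -1, -1, 1]
loadings, ratios = pca(list(zip(x1, x2, x3)), n_components=2)
```

Here the first component loads almost equally on `x1` and `x2` and close to zero on `x3`, so the two redundant inputs collapse into one candidate predictor.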

C. Increased Complexity and Inter-dependency of Variables

Macroeconomic indicators form a key component of most revenue forecasting, capital buffer computation, and regulatory modeling exercises. As in other economic disruptions, macro-indicators such as the unemployment rate and the housing price index show much stronger correlation than usual.

A fundamental reason for the failure of MBS (Mortgage-Backed Securities) and CDS (Credit Default Swaps) valuation models in the 2008-09 crisis was the incorrect assumption that risk factors are independent. That assumption may hold approximately in normal economic circumstances, but risk factors show strong inter-dependency in times of crisis.

Regression-based models rely on predictor variables not being strongly collinear; when predictors move together, coefficient estimates become unstable and hard to interpret. This also applies to widely used time-series techniques that add external regressors to ARIMA models (ARIMAX). Many of these frameworks suffer from multi-collinearity issues during turbulent economic times. Decision-tree-based models, including CART, random forests, and XGBoost / CatBoost, can be more effective in economic downturns, as they capture non-linear, complex relationships in the data and their predictive accuracy is far less sensitive to multi-collinearity.
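A quick way to check whether a regression's predictors have become too inter-dependent is the variance inflation factor (VIF). For predictor j, VIF_j = 1 / (1 − R_j²), where R_j² comes from regressing that predictor on all the others; equivalently, it is the j-th diagonal entry of the inverse of the predictors' correlation matrix, which is what this dependency-free sketch computes. A VIF above roughly 5 to 10 is a common rule-of-thumb warning sign, not a formal test. The data is hypothetical.

```python
import math

def correlation_matrix(columns):
    """Pearson correlation matrix for a list of equally long numeric columns."""
    def corr(a, b):
        ma, mb = sum(a) / len(a), sum(b) / len(b)
        cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
        return cov / math.sqrt(sum((x - ma) ** 2 for x in a) * sum((y - mb) ** 2 for y in b))
    return [[corr(a, b) for b in columns] for a in columns]

def invert(matrix):
    """Gauss-Jordan inversion with partial pivoting."""
    n = len(matrix)
    aug = [list(row) + [1.0 if i == j else 0.0 for j in range(n)] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("matrix is singular: predictors are perfectly collinear")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [x / p for x in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [x - factor * y for x, y in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]

def variance_inflation_factors(columns):
    """VIF_j = j-th diagonal entry of the inverse correlation matrix."""
    inv = invert(correlation_matrix(columns))
    return [inv[i][i] for i in range(len(columns))]

# Hypothetical macro predictors: the first two move almost in lockstep.
unemployment = [1, 2, 3, 4, 5, 6]
claims_proxy = [1.1, 1.9, 3.2, 3.8, 5.1, 5.9]
rate_spread = [1, -1, -1, -1, -1, 1]
print([round(v, 1) for v in variance_inflation_factors([unemployment, claims_proxy, rate_spread])])
```

The first two VIFs come out far above 10 while the third stays at about 1, flagging exactly the pair that would destabilize a regression.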

As businesses look to stay ahead of the curve through analytical superiority, applying the most appropriate framework for specific problems will be the key to differentiated success. Notably, stewardship from experienced analytical professionals, in tandem with innovative thinking, will allow for design of powerful frameworks, tailor-made for today’s environment with quick turnaround times.

C) RESOURCES

Impact of the Pandemic:

1. Increased difficulty of designing new solutions: The process of establishing an end-to-end analytics solution in production often takes quarters to years. Given the current uncertainty and the limited availability of relevant historical data, these timelines have been stretched further. At the same time, business leaders require more frequent, high-precision intelligence to thrive in the current dynamic environment. Many data science teams are not set up to design and develop models with the agility the current situation demands.

2. Pressure to recalibrate existing models: In addition to the regular pipeline of work, data science teams are now facing the additional workload of off-cycle recalibration of multiple existing models. The increased volatility in the economic climate also calls for multiple updates to financial forecasts and increased frequency of model monitoring. Furthermore, the demand for economic analysis and reports on business performance has increased significantly amongst leadership due to the constant swings in customer behavior. Thus, everyday life for analytics professionals now consists of a plethora of requests, coming from different parts of the organization with shorter turnaround times.

3. Work-from-home constraints: Data privacy and security requirements have traditionally kept data-science teams working as closely knit units in secure, on-site environments. Remote working breaks down customary team workflows, forcing people to spend more time in collaboration meetings and reducing the time available for individual tasks. In addition, data scientists need enhanced computing power and reliable, high-speed internet connectivity to extract data, work through firewalls, and access shared software and platforms, often with limited IT support.

The Way Forward:

1. Aligning analytics with business priorities: Many businesses stood up rapid-response analytics teams to address the overnight impact of COVID-19 and redesign data-driven solutions for the future. However, lack of understanding of the business priorities and ineffective communication of the critical issues led to the design of multiple redundant solutions. As business leaders continuously refresh their strategy to steer the firm through the pandemic, effective communication channels must be established within analytics functions to ensure alignment with the broader strategy.

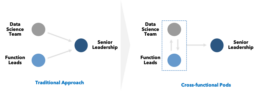

2. Tighter cross-functional collaboration: It is not uncommon for data science teams to build analytics solutions first and only then socialize them with business partners for implementation. This operating model creates a time lag before the solution reflects the rapidly changing business environment. ‘On-the-ground’ teams such as sales, marketing, and service typically have a real-time, realistic view of market conditions. Hence, now more than ever, business leaders and data scientists need to partner earlier and more closely so that the analytics engine reflects on-the-ground reality.

Exhibit 6: Recommended Cross-Functional Analytics Design

3. Breaking silos within data-science functions: In many organizations, virtual barriers separate analytical functions to ensure independence across processes. Because the pandemic has introduced multiple common requirements across teams, such as data gathering and baseline setup, these functional silos cause considerable duplication of effort. Common cross-functional portals for sharing non-sensitive yet critical information can maximize the efficiency of analytics resources at the enterprise level.

4. Centralized model management: Not all analytical solutions deployed within a business are equally impacted by the pandemic. The traditional hierarchy of model criticality has been upended and will keep changing with external circumstances. Having each team monitor the performance of its own models at short intervals is inefficient. It is therefore advisable to assemble a cross-functional crisis-response team to monitor the health of the entire suite of models and flag concerns to the respective teams as needed. This allows data scientists to focus on their core competencies and increases overall efficiency.
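The central monitoring role can be pictured as a single registry that holds each model's latest health metric and alert threshold, with alerts routed back to the owning team. The sketch below is a hypothetical illustration of that operating model; the record fields, model names, teams, metrics, and thresholds are all assumptions, and a real setup would pull metrics such as PSI or AUC from scheduled monitoring jobs.

```python
from dataclasses import dataclass

@dataclass
class ModelRecord:
    name: str
    owner_team: str
    metric: str             # e.g. "psi", "auc", "mape"
    value: float            # latest monitored value
    threshold: float        # alert level agreed with the owner team
    higher_is_worse: bool = True

def breaches(record):
    """True when the latest metric is on the wrong side of its threshold."""
    if record.higher_is_worse:
        return record.value > record.threshold
    return record.value < record.threshold

def alerts_by_team(registry):
    """Group breached models by owning team so each team only
    receives the alerts it needs to act on."""
    alerts = {}
    for rec in registry:
        if breaches(rec):
            alerts.setdefault(rec.owner_team, []).append(rec.name)
    return alerts

# Hypothetical registry maintained by the central monitoring team.
registry = [
    ModelRecord("churn_model", "Marketing", "psi", 0.31, 0.25),
    ModelRecord("credit_risk", "Risk", "auc", 0.78, 0.70, higher_is_worse=False),
    ModelRecord("revenue_forecast", "Finance", "mape", 0.20, 0.15),
]
print(alerts_by_team(registry))  # {'Marketing': ['churn_model'], 'Finance': ['revenue_forecast']}
```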

IN CLOSING…

The COVID-19 pandemic and subsequent lockdowns have led to a drastic increase in overall uncertainty for businesses. As with any disruption, the ability to adapt to changing environments will determine which businesses lose market share and which thrive in the years to come. Analytics solutions form a core part of business intelligence in the 21st century and will play a key role in the global economic recovery. For these solutions to achieve their full potential, a concerted effort is needed to realign them with today’s market forces and increased volatility.

As firms strive to update their analytics engines and solutions for the new business environment, large-scale redesigns and recalibrations are needed. In this unprecedented territory, speed and agility matter more than ever before. A well-coordinated, synchronized approach is needed for this effort to succeed, and the involvement of business leadership in the process is critical. Partnerships with technology players, cloud computing providers, and business consultants will play an important role, as these partners bring valuable expertise in redesigning and structuring the most appropriate analytical solutions for a business. This holistic approach to analytics will enable businesses to successfully navigate the uncharted territory of the “new normal”.